Over the past few years, [Daniel Stenberg], the author of the cURL project, has repeatedly complained about the increasingly poor quality of bug reports, many of them built on LLM chatbot confabulations, also known as ‘AI slop’. This has now led the project to suspend its bug bounty program starting February 1, 2026.

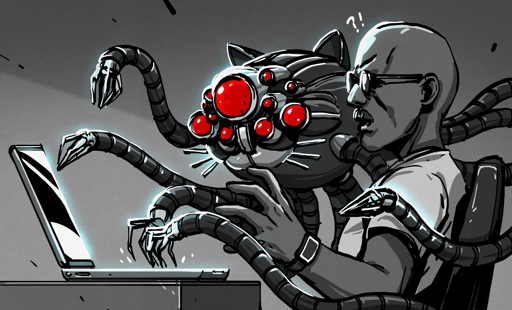

[Daniel] provides examples of such slop in a GitHub gist, which covers a wide range of intimidating-looking vulnerabilities and seemingly clear exploits. Yet none of them turn out to be vulnerabilities when examined by a knowledgeable developer. Each is a lengthy word salad that an LLM churned out in seconds, but it takes a human significantly longer to parse, and that is before dealing with the typical diatribe from the submitter.

Although valid reports are undoubtedly still coming in, anyone with access to an LLM chatbot and some spare time can generate bogus reports with ease. These have completely flooded the bug bounty system and are overwhelming the very human developers who have to dig through the proverbial midden to find that one diamond ring.

We have mentioned before how troubled bounty programs are for open source, and how projects like Mesa have already had to fight off AI slop incidents from people with zero understanding of software development.

From Blog – Hackaday via this RSS feed

The problem isn’t AI, it’s that if you offer a reward you’ll get a ton of people fishing for money for the lowest possible effort, and AI enables them to throw shit at the wall hoping it makes a Mona Lisa. It won’t, ever.

I don’t know what the answer is, but the problem is awful people, as usual.