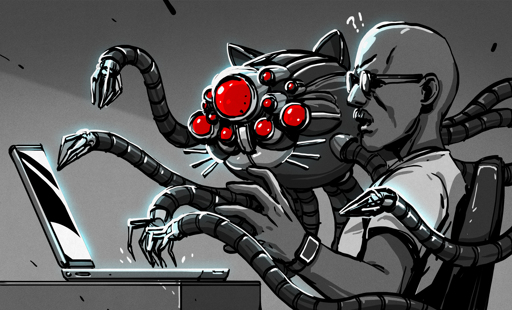

Recently [Faith Ekstrand] announced on Mastodon that Mesa was updating its contributor guide. This follows a recent AI slop incident where someone submitted a massive patch to the Mesa project with the claim that this would improve performance ‘by a few percent’. The catch? The entire patch was generated by ChatGPT, with the submitter becoming somewhat irate when the very patient Mesa developers tried to explain that they’d happily look at the issue after the submitter had condensed the purported ‘improvement’ into a bite-sized patch.

The entire saga is summarized in a recent video by [Brodie Robertson] which highlights both how incredibly friendly the Mesa developers are, and how the use of ChatGPT and kin has made some people with zero programming skills apparently believe that they can now contribute code to OSS projects. Unsurprisingly, the Mesa developers were unable to disabuse this particular individual of that notion, but the diff to the Mesa contributor guide by [Timur Kristóf] should make abundantly clear that someone playing Telephone between a chatbot and OSS project developers is neither desirable nor helpful.

That said, [Brodie] also highlights a recent post by [Daniel Stenberg] of Curl fame, who thanked [Joshua Rogers] for contributing a massive list of potential issues that were found using ‘AI-assisted tools’, as detailed in this blog post by [Joshua]. An important point here is that these ‘AI tools’ are not LLM-based chatbots, but rather tweaked existing tools like static code analyzers with more smarts bolted on. They’re purpose-made tools that still require you to know what you’re doing, but they can be a real asset to a developer, and a heck of a lot more useful to a project like Curl than getting sent fake bug reports by a confabulating chatbot as has happened previously.

From Blog – Hackaday via this RSS feed

In the last example, the one thing AI really should be doing to earn more trust is giving a confidence score for each answer, based on a separate meta-analysis of the data it has available versus its response. The code-testing tool kind of analyzes itself to see which of the issues it brought up are likely hallucinations.

AI is more useful for “looking for anything potentially worrying in a big set” than for “just rewrite the code for me and make sure it has no issues and improved performance”. With the former, it’s fine if it hasn’t accounted for everything; with the latter, one has to ensure there are no ensuing regressions, which become more likely the bigger the patch.

The LLM doesn’t know how confident it is, and even if you ask it, it’s just going to pick whichever number came up most often in its training data when people asked each other how confident they were. That’s still an unsolved problem beyond basic tasks like getting an LLM to find sentences in some prose that aren’t supported by any of the sentences in some other prose.

An LLM is functionally an averaging algorithm, so I think it’s reasonable to calculate statistical parameters behind its answers using other algorithms. Something akin to the z-score of an answer on a Gaussian distribution, or the standard deviation indicating how spread out the potential answers are.
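To make the “how spread out are the potential answers” idea concrete, here is a minimal sketch that assumes you can ask a model the same question several times and collect the answers as strings. The function name `answer_spread` and the sample answers are hypothetical, made up for illustration; nothing here comes from any particular tool. It reports how often the most common answer appears and the Shannon entropy of the answers, which is zero when every sample agrees. As the replies point out, agreement only measures consistency, not correctness.

```python
import math
from collections import Counter


def answer_spread(samples):
    """Return (most_common_answer, agreement, entropy_bits) for sampled answers.

    agreement    : fraction of samples equal to the most common answer
    entropy_bits : Shannon entropy of the empirical answer distribution
    """
    counts = Counter(samples)
    total = len(samples)
    top_answer, top_count = counts.most_common(1)[0]
    agreement = top_count / total
    entropy_bits = -sum(
        (c / total) * math.log2(c / total) for c in counts.values()
    )
    return top_answer, agreement, entropy_bits


# Hypothetical data: the same question asked eight times.
samples = ["42", "42", "42", "41", "42", "42", "17", "42"]
top, agreement, entropy_bits = answer_spread(samples)
print(f"top={top} agreement={agreement:.2f} entropy={entropy_bits:.3f} bits")
```

A low entropy here says the model keeps giving the same answer, which is a weaker claim than saying the answer is right.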

I feel like you need to know more about how LLMs operate before you can really be lecturing other people about what they are and how they could be improved.

As far as needing to have an “awareness” of how confident they are in the answer and communicate that data point along with the answer itself, yeah that sort of thing would be a 1,000% improvement, it would be wonderful. You are right about that part but your diagnosis of the details needs some work.

Sorry if I am hand-waving away too many details on the implementation, but I’m glad you get my point.

Yeah, it is a really good idea. The sticking point is that the modern structure of LLMs really doesn’t allow it; it is not that they haven’t tried. But at least so far, whatever secondary structure you try to apply to check the first answer suffers from the exact same issue: it doesn’t really “understand”, and so it’s subject to spouting totally wrong stuff sometimes no matter how carefully you try to set it up with awareness and fact checking.

That’ll tell you how likely it is that an answer’s grammatically correct and how likely it is to resemble text in the training data. If the LLM starts reciting a well known piece of literature that shows up several times in the training data and finishes it correctly, that’ll give a really good score by this metric, but no matter how much the LLM output resembles the script of Bee Movie, Bee Movie isn’t a true story or necessarily relevant to the question a user asked.
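A rough illustration of that objection, assuming per-token probabilities are available for each output. The helper names and all of the numbers below are invented for the example, not measured from any model. The score is the mean token log-probability, with perplexity as its usual companion, and it only rewards text the model finds predictable.

```python
import math


def mean_logprob(token_probs):
    """Average natural-log probability per token (closer to 0 = more 'confident')."""
    return sum(math.log(p) for p in token_probs) / len(token_probs)


def perplexity(token_probs):
    """exp of the negative mean log-probability; lower means more predictable text."""
    return math.exp(-mean_logprob(token_probs))


# Hypothetical per-token probabilities, invented for illustration.
recited_script = [0.99, 0.98, 0.99, 0.97]  # memorized text, irrelevant to the question
actual_answer = [0.40, 0.55, 0.30, 0.60]   # unusual wording, but possibly correct

for name, probs in [("recited", recited_script), ("answer", actual_answer)]:
    print(f"{name}: mean logprob={mean_logprob(probs):.3f}, "
          f"perplexity={perplexity(probs):.2f}")
```

With these made-up inputs the recited passage gets the better score even though it says nothing about whether the content is true or on topic, which is the limitation described above.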