Photo-Illustration: Intelligencer

OpenAI’s Sora, the TikTok-like video generator app, was an instant hit, shooting to the top of the download charts and hitting a million installs within a few days of launch. Its early popularity makes sense: It’s a free chance to try recent video generation technology, a fun way to joke around with friends who consent to being included in one another’s clips, and an early look at the weird dynamics of celebrity-endorsed AI.

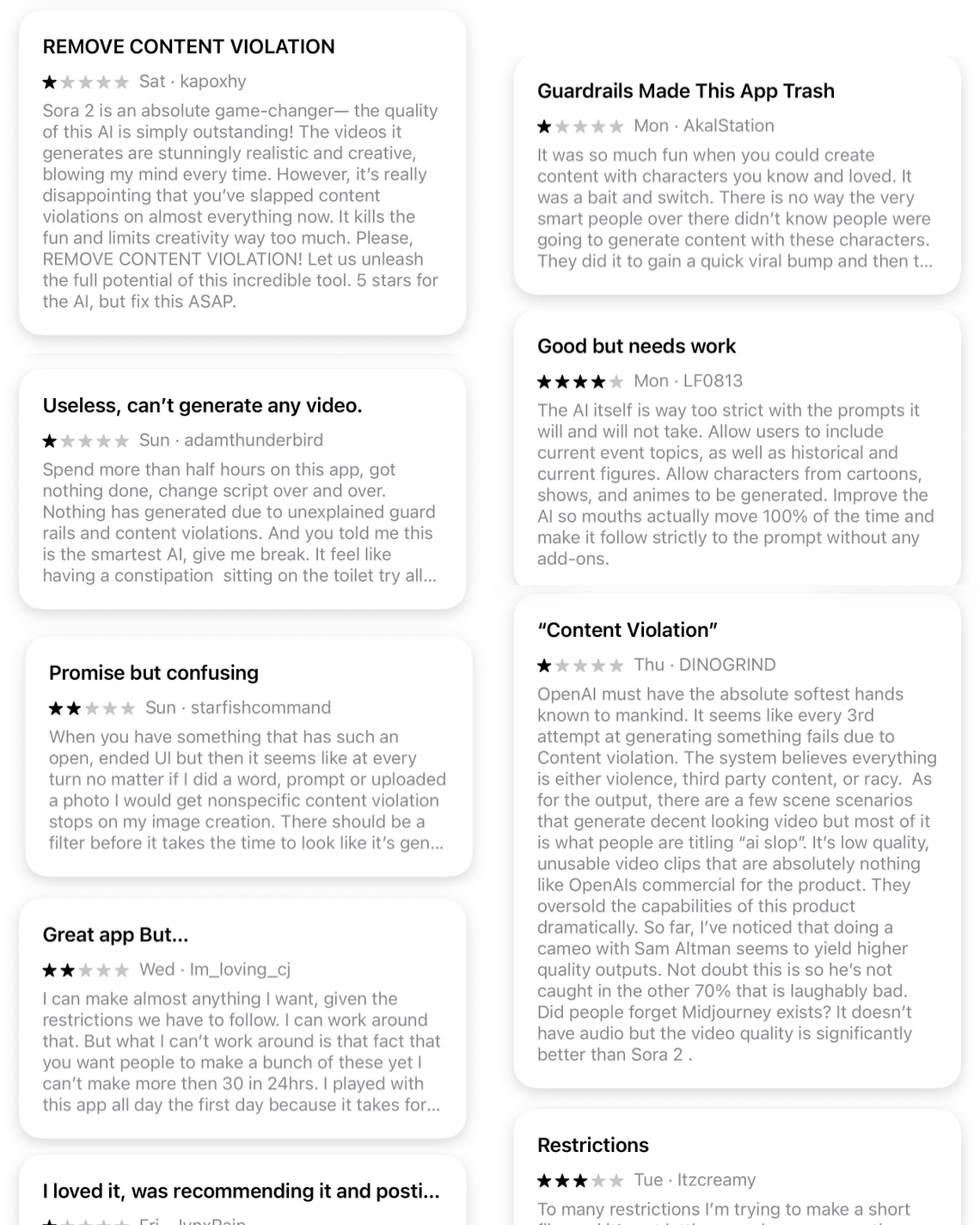

Judging by App Store reviews, though, it seems like people aren't entirely happy with Sora. Some of the complaints dragging down its rating (it's at 2.8 stars out of 5, compared to 4.9 for ChatGPT and 4.6 for Google) are straightforward and easily addressed: it's sort of glitchy; the voices aren't as good as they could be; some generations are uncanny or unconvincing; the app, despite being free, is invite-only, which can make for a disappointing download. But the most popular complaint by far is one that OpenAI can't easily deal with. It's that the app has rules:

Photo: Apple App Store

Running into Sora’s “guardrails,” as the company explicitly calls them, is a frequent experience if you’re using the app to, say, goof off with your friends. (The app’s public feed provides plenty of evidence that people are using this app to tease and embarrass their friends and the few public figures who have given permission for their likenesses to be used, like Sam Altman.) Given that the main early use case seems to be jokes — many of which are just variations on puppeteering others into saying or doing unexpected or odd things — I can imagine that encountering these guardrails is a pretty common experience.

I can also imagine why a company like OpenAI would set a huge number of limits on a social media app built around deepfakes, which are elsewhere associated with porn, harassment, and misinformation. Obviously, OpenAI is going to try to prevent people from using Sora as a nudifying app, or from generating violent imagery of children. But such a company also has pretty clear reasons to be careful with celebrity likenesses and copyrighted material, especially as it lobbies for a more lenient legal environment and deals with public and legal backlash. Sora's "guardrails" are indeed high and conservatively placed, but plenty of users, upon seeing their own faces and bodies reproduced as realistic, playable digital avatars, can probably figure out why.

Still, plenty of others experience such limits as a simple deficiency: Clearly, this model is capable of generating material that it won’t, which amounts to OpenAI telling users what they can and can’t do or see. ChatGPT, which has plenty of guardrails of its own, frequently draws similar complaints from users, as does the nominally more permissive Grok, but the rate of outright refusals from Sora is a sore point among reviewers. One factor that might be contributing to this, as reported by Katie Notopoulos at Business Insider, is the emerging culture and demographics of the young app:

As more and more people join the app, I’m starting to see them making cameos of what appear to be their real-life friends. (There are lots of teenage boys, it appears.) On one hand, teenage boys and young men are a great demographic for your nascent AI social app because they like to spend a lot of time online. On the other hand, let’s think about platforms that were popular with teenage boys: 4chan, early Reddit, Twitch streaming …. these are not necessarily role models for Sam Altman’s OpenAI.

And then there’s the Jake Paul of it all. Jake Paul is pretty much the only recognizable celebrity who lets anyone create cameos on Sora with his face — and people have gone wild… Right now, a big meme on Sora is making Jake Paul say he’s gay — with him wearing a pride flag or dressed in drag. Har har har. (I’ve started to see this bleed out into non-celebrity users, too: teen boys making cameos of their friends saying they’re gay.) Paul has responded with a TikTok video about the meme, acknowledging it and laughing about it.

As Notopoulos points out, the app’s feeds feature few women while its emerging sensibilities skew young and male. (This could obviously change or broaden out if Sora keeps growing, but for now it seems to be intensifying; beyond the already skewed demographics of AI early adopters, women have plenty of other reasons to be wary of an industrial-scale deepfaking app.) If Sora’s ratings are anything to go by, the people driving its early growth don’t have much sympathy for the complicated situation OpenAI has created for itself — fair enough! — or concern for how an unmoderated TikTok full of deepfakes might go wrong for others. They open a video generator, enter the first prompts that the app brings to mind, and get told no, imprecisely, for a variety of reasons: Sorry, that one’s copyrighted; of course we can’t render a video of your friend firing a gun into a crowd; our apologies, but that request is pretty clearly sexual. Try again!

Nah I need to know what Jake Paul thinks about these Sora AI videos 😩 pic.twitter.com/hfV7ZqsV8k

— Queenie ✌🏾🎀🇯🇲🇬🇧 (@Queenie_2312) October 7, 2025

OpenAI has released another popular app, in other words, but finds itself immediately at odds with its most eager early users: young men who want to make gay jokes about Jake Paul, tease each other relentlessly, or simply make the machine do something it's not supposed to, and feel slightly persecuted when a corporation gets in their way. More broadly, and well beyond the edgelord teens, OpenAI has created an app in which, for many users, locating and trying to circumvent guardrails is the core experience, in a world where more permissive open source models with similar capabilities are probably just a few months behind. Best of luck with this dynamic!


From Intelligencer - Daily News, Politics, Business, and Tech via this RSS feed