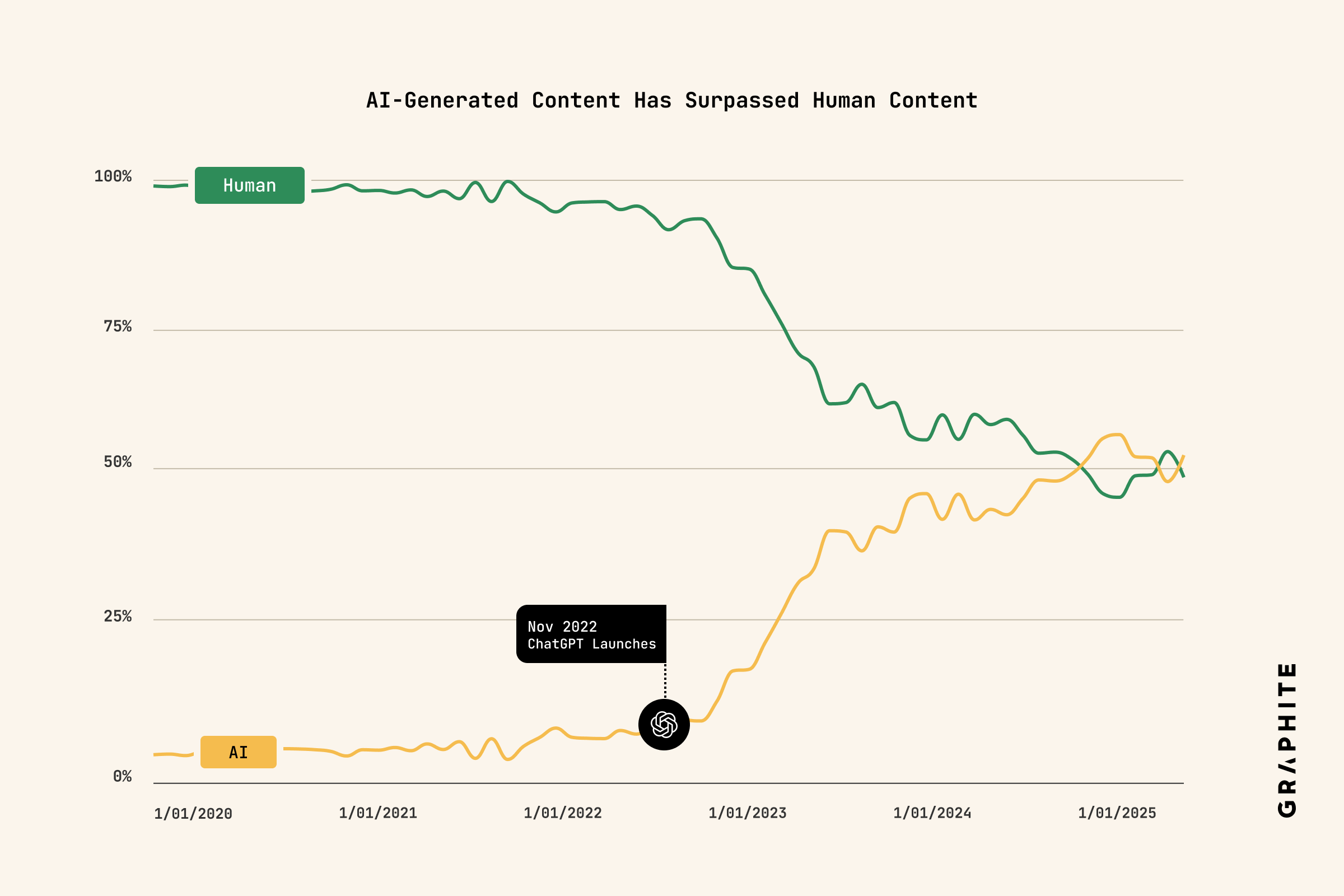

In a development that will surprise nobody who senses the rising tide of AI slop, new research indicates that AI content has now overtaken human-written work on the internet. But there’s a good-news twist. The slop seems to have plateaued.

Research outfit Five Percent has released a white paper analysing online content published between January 2020 and May 2025. Its results indicate that material created with machine-learning tools first overtook human-written content late last year.

However, the data also shows the proportion of AI-generated material plateauing. In fact, the rapid growth of AI content begins to slow as early as mid-2023.

Two immediate questions spring from this. First, why is AI content plateauing? Second, how accurate is this study likely to be? Five Percent addresses both of these queries.

“While AI-generated articles grew dramatically after ChatGPT launched, we do not see that trend continuing. Instead, the proportion of AI-generated articles has remained relatively stable over the last 12 months. We hypothesize that this is because practitioners found that AI-generated articles do not perform well in search, as shown in a separate study,” the study says.

So that's one candidate explanation for the plateau. If it holds, Google is doing a half-decent job of weeding out AI slop, believe it or not.

(Image credit: Five Percent)

But what of the accuracy? The study relied on a single AI detector, a free one from Surfer, which does not seem hugely robust. However, Five Percent says it tested that detector on 15,000 articles published between 2020 and 2022. Because those articles largely predate ChatGPT's launch in late 2022, they can be assumed to be mostly human-written, and the detector flagged about 4.2% of them as AI-generated, implying a 4.2% false positive rate.

To assess false negatives, the authors used OpenAI's GPT-4o to generate 6,009 articles and ran them through Surfer, which correctly identified 99.4% of them as AI-written, implying a false negative rate of 0.6%.
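The two checks above boil down to simple arithmetic. The Python sketch below shows the article counts those rates imply, and then applies the Rogan-Gladen correction, a textbook way of adjusting a measured share for an imperfect test. Five Percent does not say it used this correction; it is included only to illustrate how the error rates could feed into a headline figure. The 50% observed share in the example is a made-up input, not a number from the paper.

```python
# Illustrative sketch only: the two rates are the figures reported in the
# article; the Rogan-Gladen correction is a standard technique and is NOT
# claimed to be part of Five Percent's methodology.

FALSE_POSITIVE_RATE = 0.042  # presumed-human 2020-2022 articles flagged as AI
SENSITIVITY = 0.994          # GPT-4o test articles correctly flagged as AI


def implied_counts(n_human: int, n_ai: int) -> tuple[float, float]:
    """Expected number of flagged articles in each validation set."""
    return n_human * FALSE_POSITIVE_RATE, n_ai * SENSITIVITY


def corrected_share(observed: float) -> float:
    """Rogan-Gladen estimate of the true AI share from the raw flagged share.

    observed = true * SENSITIVITY + (1 - true) * FALSE_POSITIVE_RATE,
    solved for true and clipped to the range [0, 1].
    """
    true = (observed - FALSE_POSITIVE_RATE) / (SENSITIVITY - FALSE_POSITIVE_RATE)
    return min(max(true, 0.0), 1.0)


if __name__ == "__main__":
    flagged_human, flagged_ai = implied_counts(15_000, 6_009)
    print(f"{flagged_human:.0f} of 15,000 human articles flagged")  # 630
    print(f"{flagged_ai:.0f} of 6,009 AI articles flagged")  # 5973
    # Hypothetical input: a raw 50% flagged share corrects to about 48.1%.
    print(f"{corrected_share(0.50):.3f}")
```

The correction is only as good as its inputs: it assumes the error rates measured on the test sets carry over to real-world content, which is exactly the assumption in question.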

Five Percent explains how it prompted GPT-4o to generate the test articles, but no set of test prompts can fully reproduce the huge range of prompts used in the real world. So questions remain over the study's overall accuracy.

For instance, could improving AI models be producing content that is harder to detect, which would itself explain the apparent plateau? It's hard to say for sure.

That said, it is at least plausible that Google is succeeding in suppressing some AI slop, and that this is motivating content publishers to stick with more expensive humans.

If you're wondering about my own take on all this as a flesh-and-blood content generator, well, let's just say that I've yet to feel the cold, dead hand of AI on my shoulder. And this study gives at least some cause for hope that it won't inevitably all go in one direction from here.

From PC Gamer's latest-news RSS feed.